While working on a project for one of our clients, I was asked about the difference between sprites (SpriteRenderer) and UI images (CanvasRenderer) in Unity. I didn’t find much information about it, so I decided to prepare a presentation at my company to help clarify it. In this post you will find a more detailed version of the original slides I prepared for it. This blog entry is based on Unity 5.3.4f1.

Sprites are basically semi-transparent textures that are marked as sprites in their texture import settings. They are not applied directly to meshes like ordinary textures, but rather to rectangles/polygons (in the end those are meshes too, so it is not such a big difference). Sprites are images that will be rendered either in your 2D/3D scene or in your interface.

1. Usage

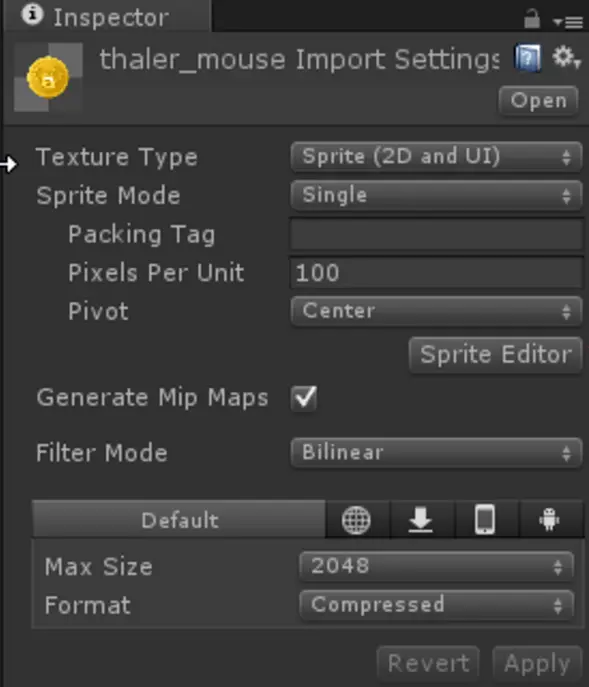

It is straightforward to use sprites in Unity. Just drop the desired image (preferably a PNG) in the assets folder and click on it to access the inspector settings. Set its texture type to Sprite (2D and UI), as shown in the screenshot.

Now it is time for you to decide between using it as a sprite or as a UI Image. But if you are reading this, you might not be sure which method you want. We will describe the differences in the next section; for now we will just quickly outline how to create both of them.

If you want to use a SpriteRenderer, just drop the sprite from the project view into the hierarchy or scene view. The inspector of the new GameObject will look like:

If you want to create a UI Image instead, just right-click in the hierarchy and create a new UI -> Image. That component requires a canvas, so one will be created if you don’t have one yet. In the end, it will look like:

2. Comparison: SpriteRenderer vs CanvasRenderer

When it comes to the hierarchy, you can place sprites wherever you want in your scene. UI Images, on the other hand, have to be inside a canvas (a GameObject having a Canvas component). You can position sprites just like all other objects through their Transform, but images use a RectTransform instead, which helps with positioning the image in your interface system.

Sprites are rendered in the transparent geometry queue (unless you use a material other than the default). UI Images are also rendered in the transparent geometry queue (Render.TransparentGeometry), unless you use the overlay rendering mode, in which case they are rendered through Canvas.RenderOverlay. As you might have guessed, it is relatively expensive to draw them on mobile. We will explain later why.

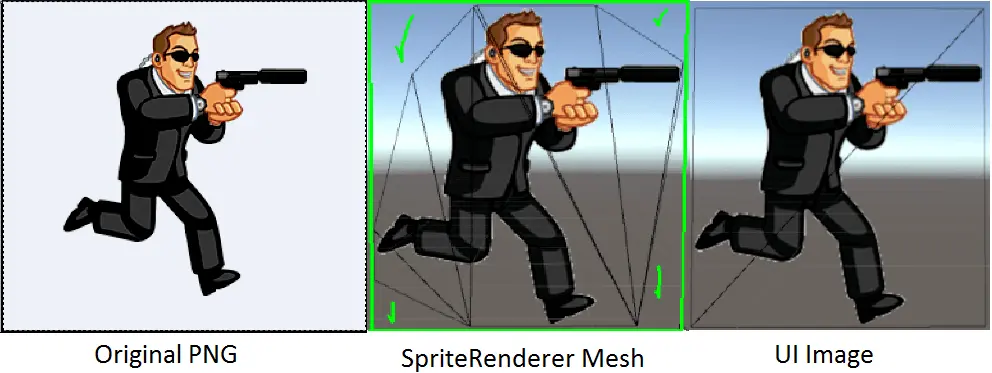

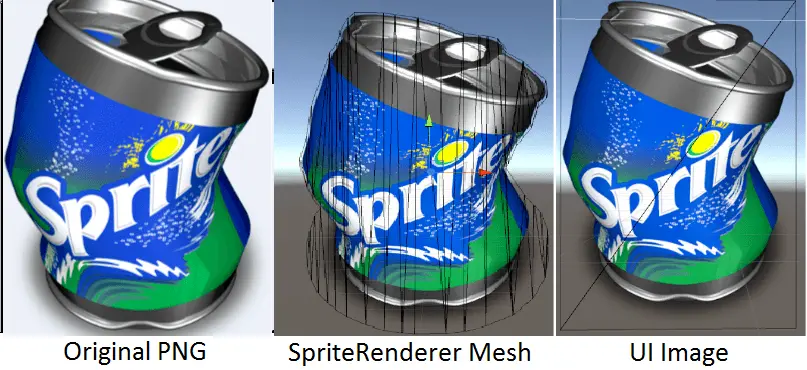

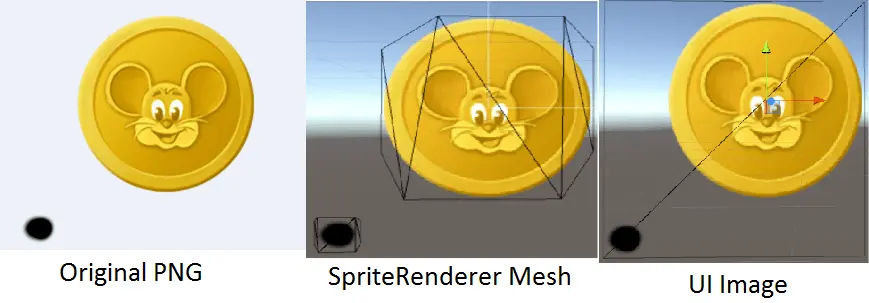

One of the key differences between sprites and images is that Unity can automatically generate a mesh for a sprite, whereas UI Images always consist of rectangles. The reason for creating meshes will be explained in the next section; as we will see, it has an important performance impact.

Lastly, both can be used with sprite atlases in order to reduce draw calls. That’s good.

It might help to see the differences with concrete examples.

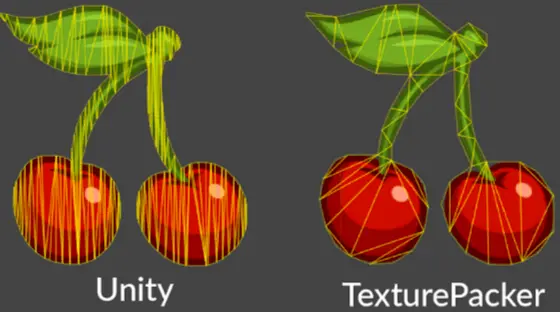

Check the differences between both methods in example 1. UI Image created a tight rectangle that envelops the sprite, whereas the SpriteRenderer created a mesh that better fits the sprite we are rendering. Let’s check another example:

The same happened in example 2. But the mesh looks much more complicated now. Why is that? Unity tries to fit the sprite as best it can without introducing too many polygons, so that is the result we get. One could argue about whether the trade-off is beneficial or not. And what happens if we import a PNG with islands, e.g. an image containing different figures separated by transparent areas?

Very interesting results in the third example. SpriteRenderer creates two submeshes, one per island; however, UI Image extends the rect so it covers the whole image. Anyway, is this important?
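To put rough numbers on the island case, here is a minimal sketch in plain Python (not Unity code). It assumes a tiny hand-made alpha mask where `#` marks an opaque pixel, and it approximates the SpriteRenderer result with one bounding rectangle per island. Unity's real mesh generation produces tighter polygons than this, so `MASK`, `bounding_rect_area` and `island_rect_areas` are purely illustrative names.

```python
from collections import deque

def bounding_rect_area(mask):
    """Area of the tightest axis-aligned rectangle around all opaque cells."""
    cells = [(r, c) for r, row in enumerate(mask)
             for c, v in enumerate(row) if v == "#"]
    if not cells:
        return 0
    rows = [r for r, _ in cells]
    cols = [c for _, c in cells]
    return (max(rows) - min(rows) + 1) * (max(cols) - min(cols) + 1)

def island_rect_areas(mask):
    """One bounding-rectangle area per 4-connected island of opaque cells."""
    height, width = len(mask), len(mask[0])
    seen = set()
    areas = []
    for r in range(height):
        for c in range(width):
            if mask[r][c] != "#" or (r, c) in seen:
                continue
            # Flood-fill this island while tracking its extents.
            queue = deque([(r, c)])
            seen.add((r, c))
            rmin = rmax = r
            cmin = cmax = c
            while queue:
                y, x = queue.popleft()
                rmin, rmax = min(rmin, y), max(rmax, y)
                cmin, cmax = min(cmin, x), max(cmax, x)
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    inside = 0 <= ny < height and 0 <= nx < width
                    if inside and mask[ny][nx] == "#" and (ny, nx) not in seen:
                        seen.add((ny, nx))
                        queue.append((ny, nx))
            areas.append((rmax - rmin + 1) * (cmax - cmin + 1))
    return areas

# Two small figures in opposite corners, separated by transparent pixels.
MASK = [
    "##......",
    "##......",
    "........",
    "......##",
    "......##",
]

single_rect = bounding_rect_area(MASK)   # 40 pixels covered by one rect
per_island = island_rect_areas(MASK)     # [4, 4], i.e. 8 pixels in total
```

In this toy case the single rectangle covers five times as many pixels as the per-island geometry, and every one of those extra pixels is fully transparent.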

3. Performance

You guessed it, it’s closely related to performance. It does make a huge difference if you are rendering many of them (like grass in a terrain, or particles). So let us analyse the reasons for it.

Whenever you render a texture, you need to send a command to the GPU driver. You set some information, like the vertices, indices, UV coordinates, texture data and shader parameters, and then you make the famous draw call. Afterwards, some nasty things happen in the GPU before the final image is displayed on your screen. A (really) simplified rendering pipeline consists of the following:

- CPU sends a draw command to the GPU.

- The GPU gets all the information it needs for drawing (like copying the textures to its VRAM, if it’s got one).

- The geometries sent are transformed into pixels through vertex shaders and the rasterizer.

- Every pixel is transformed through fragment shaders and written one or several times into the frame buffer.

- When the frame is completed, the image is displayed on your screen so you can have fun playing.

So back to the topic, what is the difference between SpriteRenderers and UI Images? It might seem that sprites are more expensive since their geometry is more complex. But what if I told you that vertex operations are normally cheaper than fragment operations? That is especially true for mobile and semi-transparent objects. But why?

In many engines, including Unity, semi-transparent materials are rendered back-to-front. That means the objects farthest from the camera are always drawn first, so the alpha blending operations work as expected. For opaque materials it is just the opposite: they are drawn front-to-back, so pixels hidden behind already drawn geometry can be rejected by the depth test.
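The two sorting rules can be sketched in a few lines of plain Python. This is only an illustration of the ordering, not how Unity implements it: `Renderable` and `draw_order` are hypothetical names, distance is a single number, and the sketch assumes all opaque objects are drawn before the transparent ones, which matches Unity's default render queues.

```python
from dataclasses import dataclass

@dataclass
class Renderable:
    name: str
    distance: float      # distance from the camera
    transparent: bool

def draw_order(objects):
    """Opaque objects front-to-back, then transparent objects back-to-front."""
    opaque = sorted((o for o in objects if not o.transparent),
                    key=lambda o: o.distance)
    transparent = sorted((o for o in objects if o.transparent),
                         key=lambda o: o.distance, reverse=True)
    return [o.name for o in opaque + transparent]

scene = [
    Renderable("wall", 10.0, False),
    Renderable("crate", 2.0, False),
    Renderable("smoke", 3.0, True),
    Renderable("cloud", 8.0, True),
]

order = draw_order(scene)   # ['crate', 'wall', 'cloud', 'smoke']
```

Note that the smoke is drawn last even though it sits in front of the wall and the cloud: nothing behind it can be skipped, because it still has to be blended on top of whatever is already in the frame buffer.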

Pixel shaders will be executed for every (visible) pixel of the sprite you are rendering, so if you have a huge sprite (in relation to the screen size), you will execute that fragment shader on many pixels. The problem with transparent objects is that you cannot effectively cull them if they lie in your camera frustum, so basically you are rendering ALL semi-transparent objects even if many of them will not be visible in the end. So you find yourself rendering the same pixels many times and overwriting them again and again in the frame buffer. This issue is commonly known as overdraw. And yes, it is a problem, since it wastes memory bandwidth and you will quickly reach the pixel fillrate limit of your GPU, something you can’t allow if you are targeting mobile. That is the key point to be understood.
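As a rough illustration of overdraw, the following Python sketch counts how often each pixel of a tiny screen is written when several semi-transparent rectangles overlap, and then estimates the fill rate a frame would need. It is a simplified model under stated assumptions: every rectangle is treated as fully semi-transparent, nothing is culled, and `overdraw_stats` and `required_fill_rate` are hypothetical helper names rather than Unity APIs.

```python
def overdraw_stats(width, height, rects):
    """Count frame buffer writes for rects given as (x, y, w, h) in pixels.

    Returns (total_writes, touched_pixels, average_writes_per_touched_pixel).
    """
    writes = [[0] * width for _ in range(height)]
    for x, y, w, h in rects:
        # Clamp each rectangle to the screen before counting its pixels.
        for py in range(max(0, y), min(height, y + h)):
            for px in range(max(0, x), min(width, x + w)):
                writes[py][px] += 1
    total = sum(sum(row) for row in writes)
    touched = sum(1 for row in writes for n in row if n > 0)
    return total, touched, (total / touched if touched else 0.0)

def required_fill_rate(width, height, overdraw, fps):
    """Pixels per second the GPU must write at a given average overdraw."""
    return width * height * overdraw * fps

# A full-screen background plus two overlapping 4x4 sprites on an 8x8 screen.
stats = overdraw_stats(8, 8, [(0, 0, 8, 8), (2, 2, 4, 4), (3, 3, 4, 4)])
# stats == (96, 64, 1.5): 96 writes for only 64 visible pixels

# Full HD at 60 fps with an average overdraw of 3:
pixels_per_second = required_fill_rate(1920, 1080, 3.0, 60)   # 373248000.0
```

Even in this small example, a third of all fragment work goes into pixels that had already been written once.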

Now, if you understood that, you will also see why SpriteRenderer and CanvasRenderer are so different. The former creates meshes that eliminate unneeded transparent pixels (therefore avoiding the execution of expensive fragment shaders and reducing overdraw), while UI Image creates a simple mesh that probably provokes a lot of overdraw. There’s always a balance you have to strike between having complex geometry and living with more fragment operations.
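That balance can be sketched with a toy cost model in Python. All numbers here are made up for illustration: `draw_cost` is a hypothetical helper, the per-vertex and per-fragment weights are arbitrary units rather than measurements, and the vertex and pixel counts are assumed values, not output from Unity.

```python
def draw_cost(vertices, pixels, vertex_cost=1.0, fragment_cost=1.0):
    """Toy estimate: total work = vertex work + fragment work."""
    return vertices * vertex_cost + pixels * fragment_cost

# Large sprite (256x256 texture): a quad versus a tighter 24-vertex mesh
# that is assumed to cover only 30,000 of the 65,536 pixels.
big_quad = draw_cost(vertices=4, pixels=256 * 256)    # 65540.0
big_tight = draw_cost(vertices=24, pixels=30_000)     # 30024.0

# Tiny sprite (8x8) on hardware where a vertex is assumed to cost four
# times as much as a fragment: the extra vertices no longer pay off.
small_quad = draw_cost(4, 8 * 8, vertex_cost=4.0)     # 80.0
small_tight = draw_cost(24, 40, vertex_cost=4.0)      # 136.0
```

Under these assumptions the tight mesh clearly wins for the large sprite and loses for the tiny one, which is why it is worth profiling on the target device instead of relying on a rule of thumb.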

You should always consider using sprite atlases, since sprites are normally large in number but small in size. Without atlases you end up with as many draw calls as sprites, and that is not good. Also, image compression works better if you have larger images.
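The effect of an atlas on draw calls can be sketched as follows. This is a deliberately simplified model in Python, assuming that consecutive draws sharing the same texture can be merged into one batch and that everything else (material, shader, sorting) is identical; `count_batches` and the texture names are hypothetical.

```python
from itertools import groupby

def count_batches(draw_textures):
    """Number of runs of consecutive draws that share the same texture."""
    return sum(1 for _ in groupby(draw_textures))

# Draw order after depth sorting, one entry per sprite.
separate_textures = ["grass", "rock", "grass", "tree", "rock"]

# The same sprites once all of them live in a single atlas texture.
atlas = {"grass": "atlas0", "rock": "atlas0", "tree": "atlas0"}
with_atlas = [atlas[name] for name in separate_textures]

batches_before = count_batches(separate_textures)   # 5
batches_after = count_batches(with_atlas)           # 1
```

Because transparent sprites must keep their back-to-front order, sprites with different textures interleave and break batches; packing them into one atlas removes the texture switch that forces the split.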

You can easily create sprite atlases through Unity’s Sprite Packer. Still, sometimes the automatically created meshes are not optimal and you have no control over them, so you might consider using more advanced software like ShoeBox or TexturePacker.

4. Conclusion

The next time you work with sprites, think about the following:

- If you only have a few sprites, use whatever you prefer. If you have hundreds of them, reread this post.

- Use the profiler and the frame debugger to know what is going on.

- Avoid using transparency. Try replacing sprites with opaque alternatives if your budget allows it.

- Avoid rendering big sprites on screen, as more overdraw will happen. You can easily check for overdraw issues in the scene view by selecting Overdraw as rendering mode. This is critical for particle systems.

- Prefer having a more complicated geometry than a lot of pixels, especially on mobile. Check the result in the scene view by rendering it as shaded wireframe.

- If you need the interface positioning helpers (like Content Size Fitter, Vertical Layout Group, etc.), go for UI Images.

- To know if you are hitting the pixel fillrate limits, check if performance substantially improves by reducing the resolution of your rendered area.
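The last check in the list can be turned into a small helper. The sketch below is plain Python and assumes you have already measured average frame times yourself, once at full resolution and once at a reduced one; `looks_fill_rate_bound` and its 25% threshold are hypothetical choices, not values taken from Unity.

```python
def looks_fill_rate_bound(ms_full, ms_scaled, threshold=0.25):
    """Heuristic: a large relative speed-up at lower resolution hints at
    a fill rate (fragment) bottleneck rather than a CPU or vertex one."""
    if ms_full <= 0:
        raise ValueError("ms_full must be a positive frame time")
    improvement = (ms_full - ms_scaled) / ms_full
    return improvement >= threshold

# Frame time drops from 33 ms to 18 ms at half resolution: likely fill rate.
fill_bound = looks_fill_rate_bound(33.0, 18.0)      # True

# Frame time barely changes: the bottleneck is probably elsewhere.
not_fill_bound = looks_fill_rate_bound(33.0, 32.0)  # False
```

If the frame time hardly moves when the resolution drops, look at draw calls and scripts first instead of reworking your sprites.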

Let me know if you have questions or would like to point out a mistake. Any other feedback is also welcome.